Want to know how smart companies are actually spending money?

We here at Mostly Metrics world headquarters love data. Like, love it. And Brex is cooking up something beautiful with The Brex Benchmark — real spend trends sourced from millions of monthly anonymized Brex transactions.

Here's what caught my eye: Startups are spending 2x more on Anthropic than OpenAI, but enterprises flip that script entirely. Meanwhile, Runway and Cursor are top tools for AI video and coding across segments. (Turns out, vibe coding is a budget line item now.)

This isn't survey data or self-reported nonsense. This is actual dollars flowing through actual companies building the future. The kind of intel that makes your next budget conversation way more interesting. Check out Brex's data to see how your stack stacks up.

Software pricing and packaging have undergone a radical transformation since the early 1950s. The clear through line is a changing unit of value, and how it's tracked.

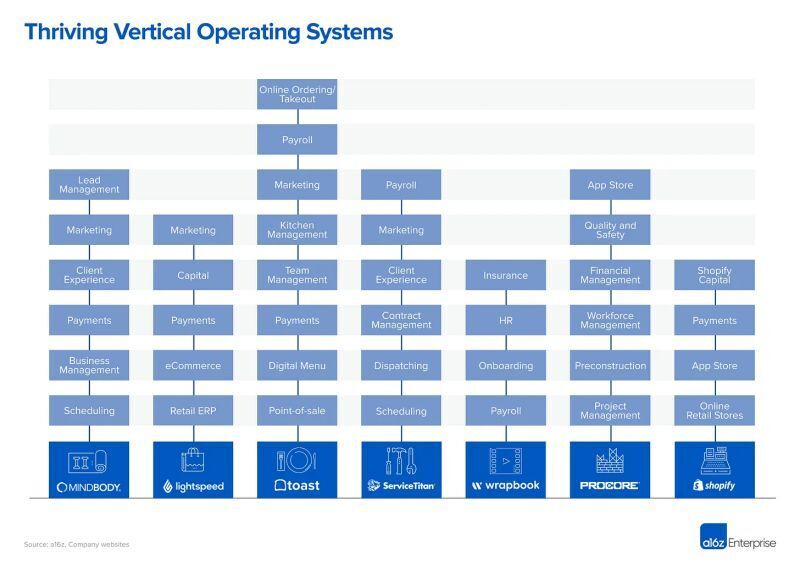

The industry has undergone six waves of change:

1. Hardware + Software Sold Together
2. Software Sold for Hardware
3. The ASPs (Application Service Providers)
4. Outsourced Infrastructure + SaaS
5. Usage-Based Pricing
6. Hybrid

What you'll notice as we go through the waves is that there's always a segment that stays behind, because the older model is still the best way for them to do business. And that's fine. But each new wave was created because the existing system wasn't working perfectly for the vendor-customer relationship.

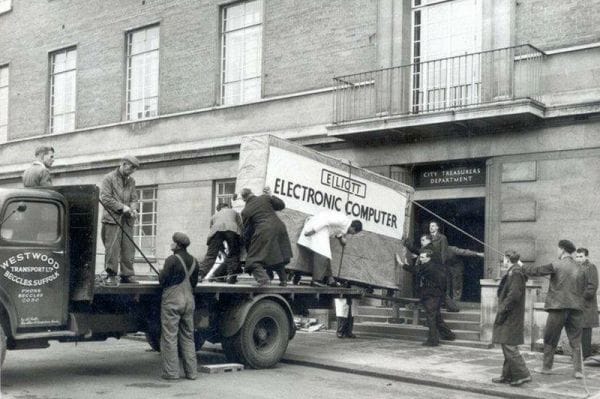

Computing was first commercialized in the 1950s. In the first wave, hardware and software were sold together. The software had to be written for your specific model, not just your manufacturer, and it came preinstalled on your hardware.

Computers in those days were delivered in semi trucks.

They had to be installed on site and could only be configured by whoever you bought them from. So the software salesman was really the hardware salesman. And most of the software just let you build your own software for whatever you wanted the computer to do. It was more about making the machine programmable; it was a choose-your-own-adventure type of deal.

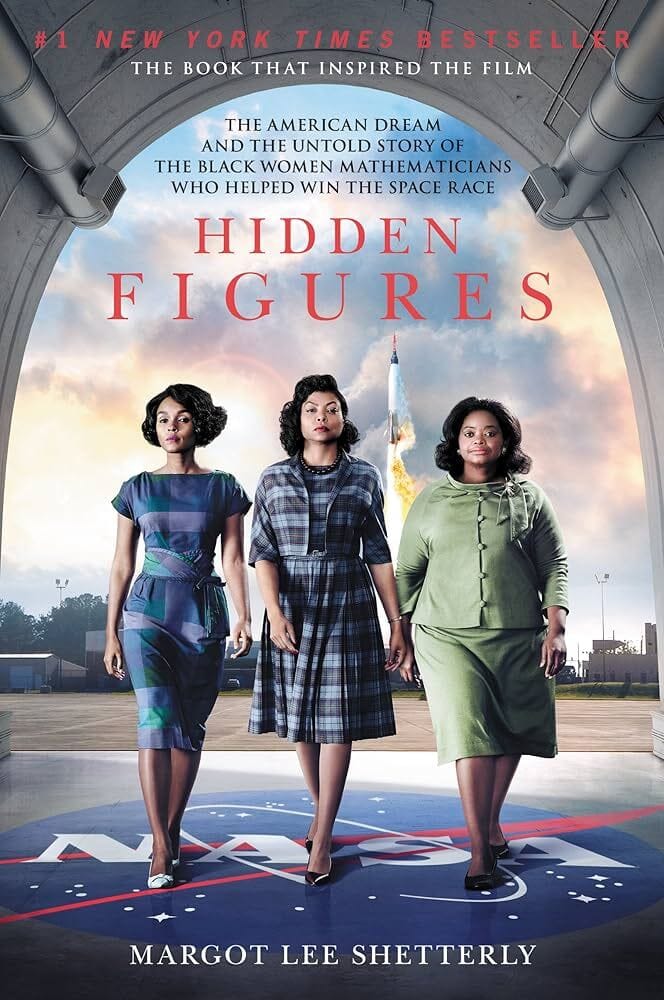

If you've seen the movie Hidden Figures, you'll remember the women learning to program the newly delivered IBM computer, trying to get it to do something useful.

The metric for pricing was the hardware.

And there are still some vendors in this wave today, like makers of military GPS systems.

But largely, the delivery of software bundled with hardware ended in 1969, when an antitrust case against IBM pushed it to stop bundling. Even so, some software still comes with your hardware today; it's just no longer the main way software is sold.

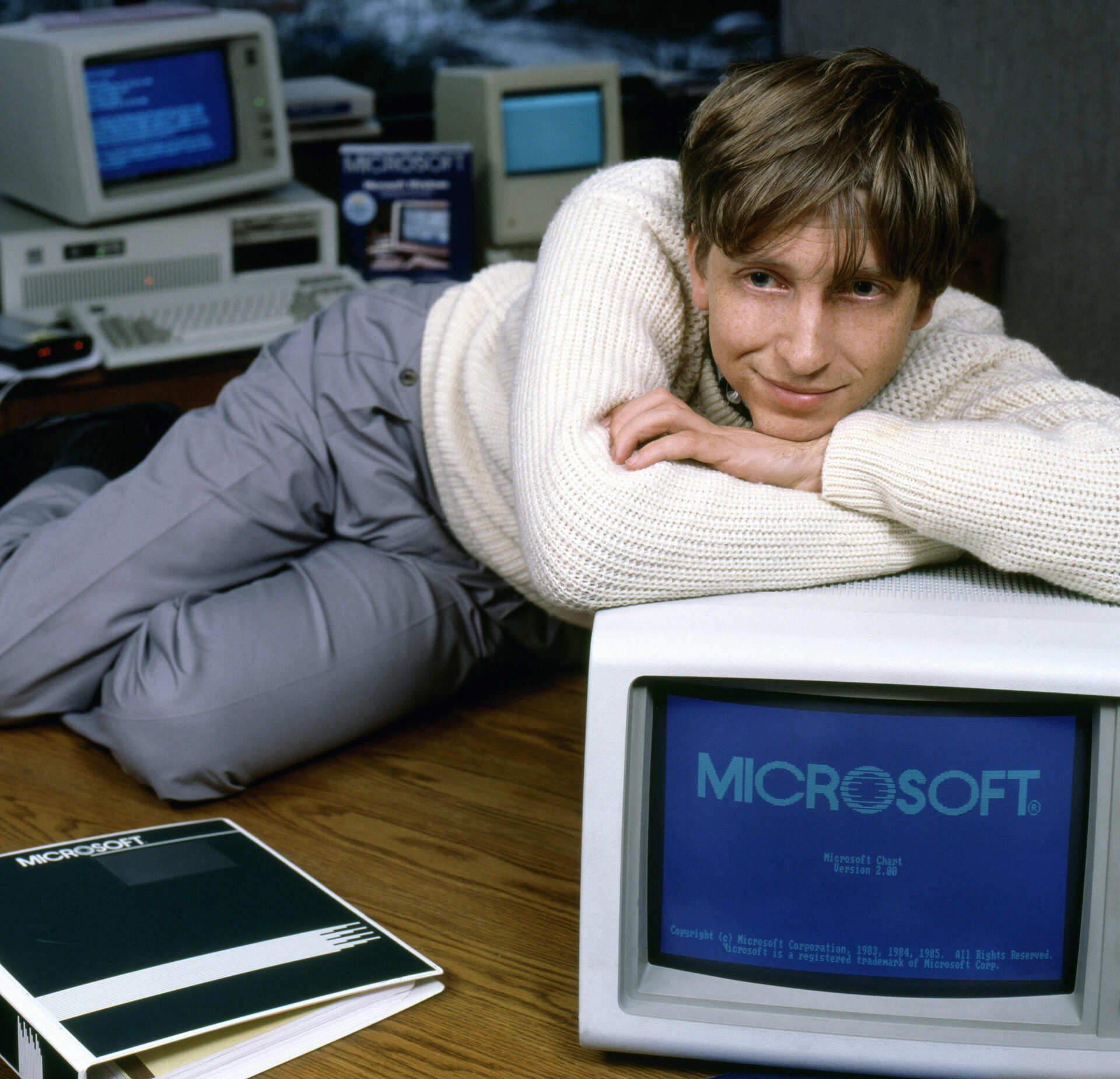

Wave 2 breaks software apart from hardware: you could buy software that ran on another company's hardware. This is the wave MSFT capitalized on best.

The pricing for this wave was called "Full Packaged Product," and for every unit you bought, you literally got a box with a floppy disk and a manual inside. Eventually the floppy was replaced by a CD, but either way, the term "shelfware" comes from this era: you'd have all these boxes sitting on a shelf in a closet.

Eventually companies said, "For the love of everything good, please stop sending me all these boxes." So instead of pallets full of software, MSFT moved to a certificate of authenticity that looked like a bearer bond with a hologram on it.

A relic of this era: in software today we still often call the thing we're selling a SKU (stock-keeping unit), because customers expected to receive something. And they tracked the boxes as assets, since it's easier to track a physical asset than an ephemeral one.

MSFT came up with a successful arrangement called the Enterprise Agreement, where you told them how many PCs you had. They wouldn't ship you anything; you'd just get one document. The innovation here was the counting.

And keep in mind that there wasn't a one-to-one relationship between people and computers yet. There was no reason for people in the warehouse or on the trucks to have a full-time PC.

On-prem software goes to the customer, and whatever the customer does with it is up to them. It lives on their servers and their desktops, and it's sold as a perpetual license.

So, to take a step back: if in the hardware phase the pricing metric was the hardware, in this phase the metric was first the box, then the paper certificate, and then a proxy: the number of PCs.

Then a shorter Third Wave came along in the mid-1990s: the Application Service Provider era. People began to question whether maintaining their own servers was still the best way to do business. Customers said, "I want to worry less about deploying the actual application and just make sure that when employees come to work, they can open up Excel and use it." Rackspace is still around and serves a niche, but it's one of the last ASPs.

But neither the unit economics nor the reliability of the internet were there yet. We were still on unstable internet technology, which created a lot of downtime across the board. And the unit economics didn't really work for customers who still needed a few servers internally to run other things. It was expensive.

Most of the ASPs vanished or crashed all at once when the internet bubble burst in 2001, and we learned we didn't know as much about internet economics as we thought we did.

OK, now we’re getting real.

A number of developments in the mid-to-late 2000s brought along the Fourth Wave: outsourced infrastructure as a service (IaaS) and software as a service (SaaS).

First, Amazon launched elastic computing in 2006. The idea was, "Hey, we aren't using this all the time; we have seasonal ups and downs. Is there a way for people to pay us to use the infrastructure we aren't using seasonally?"

So they took the best parts of the ASP model and went at it again.

And even earlier, in 1999, we have Salesforce, which was born in the cloud.

This is the start of the software as a service era.

And then in 2006 you have another thing coming forward: Google Apps. Google said, "Hey, you don't need to buy that expensive, heavyweight MSFT software for every user anymore. We have this free, lightweight stuff you can deliver over the internet."

It wasn’t that great yet, but hey, it’s a start.

The metric for AWS: they aren't selling you a server, they're selling you compute. They abstract the physical server into a different unit, so they can sell you a megabyte of storage.

Similarly, Salesforce said, "We can license each individual user." Google followed that same path.

So it took us 50-plus years, but we got to the SaaS era. By 2009, everyone agreed the SaaS wave was real, not just a fad.

And investors love it. How many of you measure ARR as a north star metric?

ARR made revenue much more predictable, and investors knew the math resulted in a larger customer lifetime value.

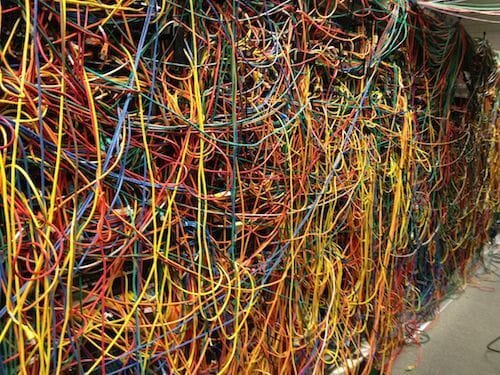

But here's the rub for vendors selling software: everyone feels better that we don't have all this extra infrastructure and these massive server rooms, but in the SaaS model you still have shelfware. It's just not physically on a shelf. And that's actually worse, because you can't see it anymore or really know who you're spending the money on. You can't walk down the hallway and accurately gauge it.
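Here's a minimal sketch of the shelfware math that gets hard to see once the boxes are gone, assuming you can pull a count of seats purchased and seats actually active in a given year. The function name and every number in it are hypothetical.

```python
def shelfware_cost(seats_purchased: int, seats_active: int, price_per_seat: float) -> float:
    """Return what is spent on paid-for seats that nobody is using (hypothetical helper)."""
    if not 0 <= seats_active <= seats_purchased:
        raise ValueError("active seats must be between 0 and seats purchased")
    idle_seats = seats_purchased - seats_active
    return idle_seats * price_per_seat


# Hypothetical contract: 500 seats at $1,200 per seat per year, 380 of them in active use.
idle_spend = shelfware_cost(seats_purchased=500, seats_active=380, price_per_seat=1200.0)
utilization = 380 / 500
print(f"Idle spend: ${idle_spend:,.0f} per year; seat utilization: {utilization:.0%}")
```

The formula is trivial; the hard part is the input. Active seats used to be visible in a closet, and in SaaS they have to come from usage data someone actually looks at.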

CFOs started pushing back on vendors: "I want to buy a different way."

And this brings us to the Fifth Wave: usage-based pricing. The pendulum now seems to be swinging back toward the customer.

Like I said, the push originates in the finance department. They say, "Hey, IT has grown to become one of my largest cost centers, but I can no longer walk around and point to who's using it. All this money is going to seats."

So we only want to pay for what we use.

But here's the new rub: selling software the way customers buy electricity doesn't work well for vendors. You start to hear the dirty words "pricing optimization" pop up in earnings calls.

In a rising tide, usage is the best. The economy is growing, all of my customers are growing, I entice them with a low-cost offer to put stuff in the cloud, and their data and usage grow.

But in a receding tide, it's horrible. As customers shrink, I shrink. My revenue gets wavy, and it's hard to make investments in my roadmap.
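A minimal sketch of that rising-and-receding-tide effect, assuming a single customer billed purely on usage at a flat per-unit rate. The rate and the monthly usage figures are hypothetical.

```python
def usage_bill(units_used: float, price_per_unit: float) -> float:
    """Pure usage-based pricing: the bill tracks consumption one-for-one, with no floor or cap."""
    return units_used * price_per_unit


# Hypothetical monthly usage for one customer: three months of growth, then a pullback.
monthly_units = [1_000, 1_200, 1_500, 900, 700]
PRICE_PER_UNIT = 0.25  # hypothetical rate

bills = [usage_bill(units, PRICE_PER_UNIT) for units in monthly_units]
swing = max(bills) - min(bills)

for month, bill in enumerate(bills, start=1):
    print(f"Month {month}: ${bill:,.2f}")
print(f"Peak-to-trough swing in monthly revenue: ${swing:,.2f}")
```

When usage grows, revenue grows with it for free; when the customer cuts back, the vendor's revenue falls in exactly the same proportion, with nothing underneath to cushion it.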

Then customers come back and say, "Wait, this isn't actually what I want. I was wrong. I thought I knew what I was signing up for, but this thing is uncapped. I have these overages."

So now we have tensions between customer and vendor.

It isn’t good for anyone when a customer churns out the top.

And that's how we get to the Sixth Wave, where the puck is going today.

Plenty of companies are still in the Fourth Wave (seat-based SaaS or IaaS) and the Fifth Wave (usage-based). The Sixth Wave is an attempt to blend the best of both worlds, continuing a trend we've seen throughout all these phases: take what worked, and drop what didn't.

The hybrid model says, "You'll pay me this much per month for this resource, and if you go over, a usage model on top will compensate for the overage." As the vendor, I get to have my cake and eat it too.

And at the same time, the customer can predict their spend to a higher degree of certainty and understand what they’ve committed to.

But it also brings a pain point: it's hard to explain, and often hard to link to a value metric.
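Here's a minimal sketch of the hybrid construct, assuming a committed monthly fee that includes a fixed allowance of units plus a per-unit overage rate beyond it. The function name, parameters, and plan numbers are hypothetical.

```python
def hybrid_bill(units_used: float, base_fee: float, included_units: float, overage_rate: float) -> float:
    """Hybrid pricing: a committed monthly fee covers an allowance of units,
    and only usage beyond that allowance is billed at the overage rate."""
    overage_units = max(0.0, units_used - included_units)
    return base_fee + overage_units * overage_rate


# Hypothetical plan: $1,000 per month includes 5,000 units; each extra unit costs $0.25.
PLAN = {"base_fee": 1_000.0, "included_units": 5_000, "overage_rate": 0.25}

quiet_month = hybrid_bill(4_000, **PLAN)  # under the allowance: pays the committed fee only
busy_month = hybrid_bill(6_000, **PLAN)   # 1,000 units over: fee plus 1,000 x $0.25
print(f"Quiet month: ${quiet_month:,.2f}; busy month: ${busy_month:,.2f}")
```

The base fee is the predictable floor both sides want. The `max(0.0, ...)` overage term is the part someone has to explain to the customer's finance team.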

Top Pain Points and Solutions

So we find ourselves at a unique inflection point, where many vendors are jumping on this sixth wave, the hybrid model. At the same time, we have a new technology that both customers and vendors have limited experience pricing and packaging: AI.

Let’s talk about the top pain points today and solutions we can identify from this trip through history.

First Pain Point: It takes too long to explain to potential customers how we charge, and it's slowing down deal cycles

The whole point of pricing is to make money. It’s bad when the pricing construct actually slows down revenue.

The Solution: You need to count in a way that makes sense for the customer.

You need to count something people understand. If you've come up with a complicated measure that only makes sense on your end, you're being selfish.

The value metric for a warehouse ERP is how many items are in the warehouse. Don't invent your own convoluted measure in a back room without talking to customers.

Here's an example: Oracle proposed a big sale to a city in the Netherlands. The city said, "We will never buy this; it's far too expensive."

Oracle went back to the drawing board and said, "What if we charge you X per citizen per year?"

This was brilliant because the municipality already thought about everything in terms of citizens: their tax base, their hospital base, every service they had was tied back to citizens.

And by counting how they counted, they won the deal.

And by the way, it was the same total amount as before.
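The arithmetic behind the Oracle story is simple, which is the point: the total doesn't change, only the unit it's expressed in. A minimal sketch, with a hypothetical contract value and population (the real figures aren't given):

```python
def price_per_unit(total_annual_price: float, units: int) -> float:
    """Re-express the same annual contract value in the unit the customer already counts."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_annual_price / units


# Hypothetical figures: a 2,000,000-per-year deal for a city of 400,000 citizens.
TOTAL_ANNUAL_PRICE = 2_000_000
CITIZENS = 400_000

per_citizen = price_per_unit(TOTAL_ANNUAL_PRICE, CITIZENS)
print(f"{per_citizen:.2f} per citizen per year")
assert per_citizen * CITIZENS == TOTAL_ANNUAL_PRICE  # same total, different unit
```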

Second Pain Point: OK, I sold the deal, but now the users of my software can't explain the bill to their accounting department

This is the other end of the problem. First we talked about winning the deal at the front of the house. Now we're talking about avoiding churn because the back of the house doesn't get it.

The Solution: Associate costs with activities to provide clarity to both accounting and users

A bill that just says $400, with no indication of what it's for, isn't good business.

Break it out in a way that says, "This much is for storage, this much is for compute," and so on, so the accounting department and the users of the software can have an educated conversation.
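A minimal sketch of what "associate costs with activities" can look like on the invoice itself, assuming each charge is a quantity of some activity times a unit price. The activities, units, and prices are hypothetical, chosen so the lines add up to the $400 from the example.

```python
from dataclasses import dataclass


@dataclass
class LineItem:
    activity: str      # what the customer did, in terms they recognize
    quantity: float
    unit: str
    unit_price: float

    @property
    def amount(self) -> float:
        return self.quantity * self.unit_price


def render_invoice(items: list[LineItem]) -> str:
    """Format one line per activity, then the total, so the bill can be traced to its parts."""
    rows = [
        f"{item.activity:<8} {item.quantity:>6,.0f} {item.unit:<10} x ${item.unit_price:.2f} = ${item.amount:,.2f}"
        for item in items
    ]
    total = sum(item.amount for item in items)
    rows.append(f"{'Total':<8} ${total:,.2f}")
    return "\n".join(rows)


# Hypothetical usage for one month.
items = [
    LineItem("Storage", 400, "GB-months", 0.25),
    LineItem("Compute", 500, "hours", 0.50),
    LineItem("Support", 1, "month", 50.00),
]
print(render_invoice(items))
```

Each line answers "what did we do to cause this charge?", which is the question the accounting department will ask the users anyway.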

As an FP&A person at heart, I care about this a lot for forecasting. It's hard to forecast a black box.

The lesson here is that we need to rely on systems.

You need to bill your customer in a way that illuminates, not confuses.

Third Pain Point: How do I price AI fairly, in a way that covers my costs and also provides value to my customers?

Unlike traditional SaaS, where scaling comes with near-zero marginal costs, AI flips the equation on its head.

AI isn't like traditional software. It's expensive to run. Scaling AI isn't just a matter of adding more servers; it requires significant investments in infrastructure, energy, and data.
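To see why non-zero marginal cost changes the math, here's a minimal sketch of gross margin on a flat per-user fee when every request carries a compute cost. The seat price, per-request cost, and usage levels are hypothetical placeholders, not real model pricing. Amounts are in cents to keep the arithmetic exact.

```python
def seat_margin_cents(seat_price_cents: int, requests: int, cost_per_request_cents: int) -> int:
    """Monthly gross margin on one user paying a flat seat price."""
    return seat_price_cents - requests * cost_per_request_cents


SEAT_PRICE_CENTS = 2_000  # hypothetical $20 per user per month

# Traditional SaaS: serving one more request costs roughly nothing.
saas_light = seat_margin_cents(SEAT_PRICE_CENTS, requests=300, cost_per_request_cents=0)
saas_heavy = seat_margin_cents(SEAT_PRICE_CENTS, requests=3_000, cost_per_request_cents=0)

# AI product: every request burns compute (hypothetical 1 cent each).
ai_light = seat_margin_cents(SEAT_PRICE_CENTS, requests=300, cost_per_request_cents=1)
ai_heavy = seat_margin_cents(SEAT_PRICE_CENTS, requests=3_000, cost_per_request_cents=1)

print(f"SaaS margin, light vs heavy user: ${saas_light / 100:.2f} vs ${saas_heavy / 100:.2f}")
print(f"AI margin, light vs heavy user:   ${ai_light / 100:.2f} vs ${ai_heavy / 100:.2f}")
```

In traditional SaaS the heaviest user costs about the same to serve as the lightest; with AI, a flat seat price can turn your most engaged users into your least profitable ones.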

The first question to ask yourself:

Is this AI product a standalone product, like ChatGPT or Perplexity, or an add-on to an existing product, like Canva or Notion?

If it's a standalone product, charging a per-user fee is still the simplest way to sell a product and the simplest way for users to buy one. I pay $20 per month for ChatGPT. Easy on-ramp.

If it's augmenting an existing product, you can bundle it into an existing package to increase overall sales of that package, or you can make it either (a) a higher-priced package tier (pay $5 more for AI features) or (b) an à la carte add-on (pay $5 more for this AI module on top of what you have).

Now, if we learned anything from the first two pain points, it's that you'll want to avoid confusing usage-based pass-through costs and just make it simple for people to buy. When in doubt, simple wins.

The other lesson here is to listen to what users are counting. We're still very much in the early innings, and only a few companies, like Intercom, have innovated on pricing their AI products by charging based on a specific value metric.

Intercom made a splash with pricing for its AI support chatbot, Fin. The product is priced at $0.99 per successful resolution, directly aligning the price paid with the value received.

But don't get arrogant and think you're there if you aren't yet. Not only could you slow down revenue (our first pain point), but, more importantly, you could also screw up your cost structure.

My advice would be to keep it stupid simple until you've talked to enough customers to make a move that clearly links to a value metric, and a value metric you can also base your own cost forecasts on. And realize that whatever you put out now may change a lot later. That's OK.
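Using the $0.99-per-resolution price from the Intercom example, here's a minimal sketch of a value metric you can also forecast your own costs against. It assumes, hypothetically, that the vendor pays a fixed compute cost on every conversation, resolved or not, and bills only the resolved ones; the cost figure and the conversation outcomes are made up, and this is not a description of how Intercom actually meters Fin. Amounts are in cents.

```python
PRICE_PER_RESOLUTION_CENTS = 99  # the $0.99 per successful resolution from the Intercom example


def outcome_revenue_cents(resolved: list[bool]) -> int:
    """Bill only conversations that ended in a successful resolution."""
    return PRICE_PER_RESOLUTION_CENTS * sum(resolved)


def outcome_margin_cents(resolved: list[bool], cost_per_conversation_cents: int) -> int:
    """The vendor pays to run every conversation but only gets paid for resolved ones."""
    cost = cost_per_conversation_cents * len(resolved)
    return outcome_revenue_cents(resolved) - cost


# Hypothetical month: 10 conversations, 6 resolved, 30 cents of compute each.
conversations = [True] * 6 + [False] * 4
revenue = outcome_revenue_cents(conversations)
margin = outcome_margin_cents(conversations, cost_per_conversation_cents=30)
print(f"Revenue ${revenue / 100:.2f}, margin ${margin / 100:.2f}")
```

The resolution rate drives both lines: if fewer conversations resolve, revenue falls while cost doesn't, so you need to be able to forecast that rate before you commit to the metric.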

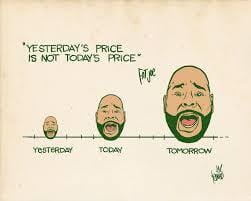

In the words of my friend and pricing expert :

The name of the game right now seems to be: (1) make pricing predictable and (2) don't make it a barrier to using your product.

Throughout this history, you'll see a constant search for clarity by both sides.

As a vendor I want you as a client to understand the value I’m delivering, because if you understand that you’ll be more willing to pay and keep me long term.

And as a customer I want to understand the cost of what’s being delivered. I only want to pay for the people who are using the product and the value they are getting.

And that only comes through clarity and mutual trust. Those are the two tenets of any pricing construct, in any era.

Run the Numbers Podcast

I was joined by Chris Greiner, CFO of Zeta Global and former CFO of IBM’s analytics division, where he worked on AI during the development of Watson. He’s had a front-row seat to the evolution of AI across industries. He’s also on a 15-quarter streak of beat-and-raise performance in his current role. Chris breaks down:

His “Closest to code, closest to customer” philosophy

How to determine whether a company's data advantage is real or just storytelling

What he learned from cataloging every task in the finance org, and why some things are better left unautomated

What's on his personal IPO pre-flight checklist

How and why Zeta Global tracks daily revenue

Looking for Leverage

Every CEO has three core jobs:

Run a good company (Operations)

Tell a good story (Investor Relations)

Deploy capital effectively (Capital Allocation)

Most CEOs spend 80% of their time on the first two. Yet it's the third, capital allocation, that often has the highest leverage and the least guidance.

This is a deep dive into capital allocation, including a discussion of sources and uses. Hint: not all are equal.

Quote I’ve Been Pondering

“When it come down to it, every Stringer Bell just needs an Avon who won’t sweep it under the rug.”

- F.I.C.O by Clipse